Embracing the Future: Enjoying Music Creation with AI and New Technology

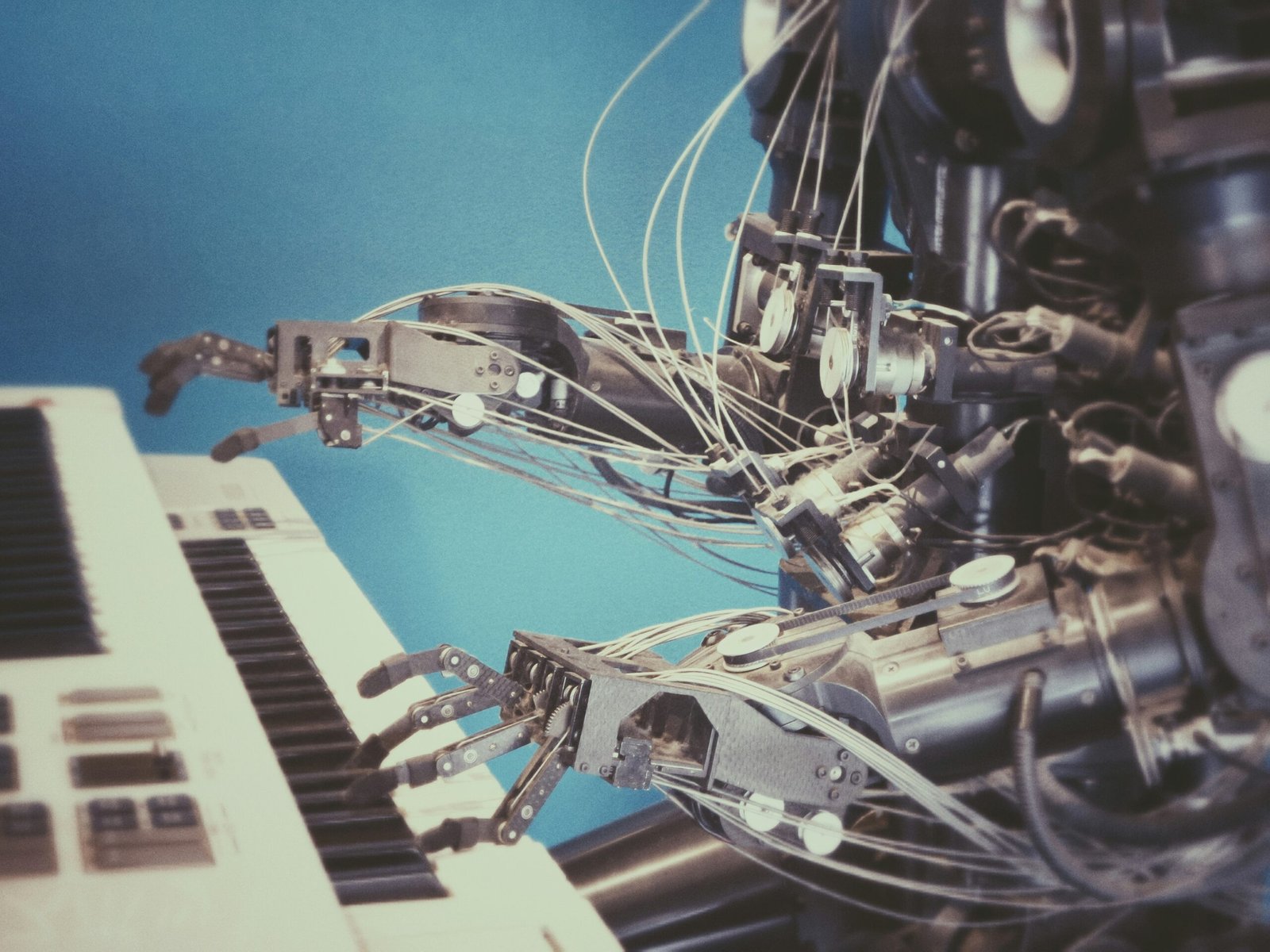

The Evolution of Music Creation: AI’s Role in Modern Composition

Music creation has changed substantially over the decades, moving from traditional methods to tools built on technologies such as artificial intelligence (AI). Historically, composition depended on musicians who had mastered their instruments and music theory. In the late 20th century, digital technology, from the MIDI standard introduced in 1983 to software-based recording and sequencing, opened up new ways of producing and composing music.

AI has given musicians a new set of tools for the creative process. Systems such as OpenAI’s MuseNet, a 2019 research model that generates multi-instrument compositions in a range of styles, and AIVA, a commercial composition assistant, show how software can help compose original pieces across genres. These tools let experienced musicians explore ideas that would be slow to sketch by hand, and they give aspiring musicians without formal training in music theory a way to start composing.

Combining human creativity with AI has several advantages. These systems are trained on large collections of existing music, learning patterns of melody, harmony, and rhythm that they can then recombine into new compositions. Artists such as Taryn Southern, whose album I AM AI was produced with AI composition tools, have used this approach to make tracks that blend human input with machine-generated material.
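To make the idea of "learning patterns from existing music" concrete, here is a deliberately tiny sketch. It swaps in a first-order Markov chain, a far simpler technique than the large neural networks behind systems like MuseNet: it records which note follows which in a small hypothetical corpus, then generates a new melody by sampling from those observed transitions. The corpus and the function names `train_markov` and `generate` are illustrative assumptions, not any tool's API.

```python
import random
from collections import defaultdict


def train_markov(melodies):
    """Record, for each note, every note that followed it in the corpus (first-order Markov chain)."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return dict(transitions)


def generate(transitions, start, length, seed=None):
    """Build a melody of up to `length` notes by repeatedly sampling an observed successor."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this note never had a successor in the corpus
            break
        # Successors that appeared more often in training are proportionally more likely here.
        melody.append(rng.choice(options))
    return melody


# A tiny hypothetical "training set" of melodies written as note names.
corpus = [
    ["C", "D", "E", "C", "E", "G"],
    ["E", "G", "A", "G", "E", "D", "C"],
    ["C", "E", "G", "E", "D", "C"],
]

model = train_markov(corpus)
print(generate(model, "C", 8, seed=1))
```

Every step in the output is a transition that actually occurred in the corpus, yet the overall sequence can be new. Real systems learn far richer, longer-range structure, but the principle of generating from learned statistics is the same.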

AI also affects accessibility and diversity in the industry. By putting composition tools in front of a wider audience, it lowers the barrier to entry and encourages people from varied backgrounds to express themselves through music. This broader access could lead to a more inclusive range of voices and stories in music.

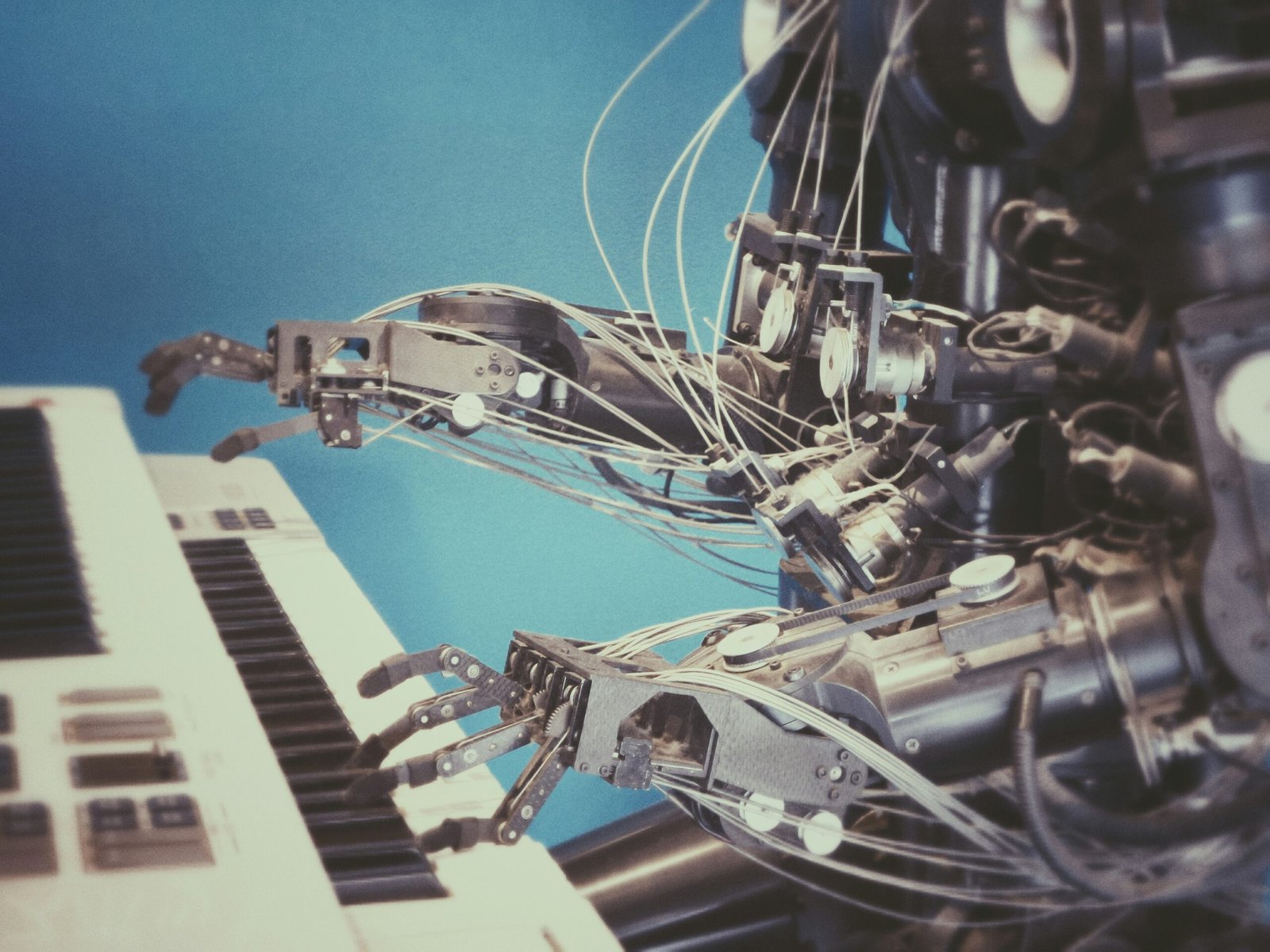

Hands-On with AI Music Generation: Tools and Techniques to Get Started

AI music tools now serve a range of users, from novices to experienced musicians. Many platforms aim to simplify composition, letting users generate melodies, harmonies, and rhythms with little setup. Well-known names include OpenAI’s MuseNet, AIVA, and Jukedeck, though availability changes quickly in this field: MuseNet was released as a research demo, and Jukedeck was acquired by ByteDance in 2019. Each tool offers different features, so it is worth checking what a service currently provides before committing to it.

For beginners, platforms such as Soundraw, and formerly Amper Music (acquired by Shutterstock in 2020), offer simple interfaces that let non-musicians compose without prior knowledge of music theory. They typically provide customizable templates and preset styles, so users can start experimenting right away. More advanced musicians may prefer Google’s Magenta, an open-source research project whose code libraries and models suit those who want to program and customize their own generative workflows.

To bring AI-generated music into live performances or your own recordings, a few steps help. First, choose a tool that suits your style or project. Once you have generated a piece, treat it as a draft: many platforms let you adjust the output, and personal touches such as a new key, a different tempo, or reworked dynamics make the piece your own. Finally, consider layering AI-generated tracks with live instruments, which can add depth and character to your music.
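The editing and layering steps can be sketched in code. This is a minimal illustration that assumes the AI output has been exported as MIDI-style note events (pitch, start, duration, velocity). The `Note` type and the `transpose`, `humanize`, and `layer` helpers are hypothetical, not part of any platform's API; in practice you would usually make these edits in a DAW or with a MIDI library.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number (60 = middle C)
    start: float     # onset, in beats
    duration: float  # length, in beats
    velocity: int    # loudness, 1-127 in MIDI


def transpose(notes, semitones):
    """Shift every pitch, e.g. to move a generated melody into a singer's range."""
    return [replace(n, pitch=n.pitch + semitones) for n in notes]


def humanize(notes, amount=8, seed=None):
    """Randomly nudge velocities by up to +/- amount so a rigid part sounds less mechanical."""
    rng = random.Random(seed)
    result = []
    for n in notes:
        v = n.velocity + rng.randint(-amount, amount)
        result.append(replace(n, velocity=max(1, min(127, v))))  # clamp to the valid MIDI range
    return result


def layer(*tracks):
    """Merge several parts (e.g. AI output plus a live part) into one time-ordered list."""
    merged = [n for track in tracks for n in track]
    return sorted(merged, key=lambda n: (n.start, n.pitch))


# Hypothetical AI-generated phrase and a hypothetical live bass note.
ai_part = [Note(60, 0.0, 1.0, 90), Note(64, 1.0, 1.0, 90), Note(67, 2.0, 2.0, 90)]
live_bass = [Note(36, 0.0, 4.0, 100)]

edited = humanize(transpose(ai_part, 2), amount=8, seed=42)
arrangement = layer(edited, live_bass)
for note in arrangement:
    print(note)
```

Each helper returns a new list instead of modifying its input, so the raw AI output stays intact and you can try several edits side by side.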

AI music generation also has drawbacks: users may feel they lack creative control, and the results can sound generic. The most effective response is to engage actively with the output, treating it as a starting point for inspiration rather than a finished product. Experimenting with a tool’s settings, collaborating with human musicians, and exploring unfamiliar genres can all strengthen the creative process.
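Many generative models expose a setting, sometimes labeled "temperature," "randomness," or "creativity," that controls how adventurous the output is. Adjusting it is one way to push back against generic results. The sketch below assumes a model that assigns a score to each candidate next note; the scores are made up for illustration. It shows how a softmax with temperature turns those scores into probabilities. Low values concentrate probability on the likeliest note, and high values spread it across less obvious choices. Individual tools may implement or name this control differently, or not expose it at all.

```python
import math
import random


def apply_temperature(scores, temperature):
    """Convert raw scores into probabilities with a softmax at the given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def sample_note(candidates, scores, temperature, rng):
    """Pick one candidate note according to the temperature-adjusted probabilities."""
    probs = apply_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical scores a model might assign to the next note.
candidates = ["C", "E", "G", "B-flat"]
scores = [2.0, 1.5, 1.0, -1.0]

for t in (0.3, 1.0, 2.0):
    print(t, [round(p, 2) for p in apply_temperature(scores, t)])

# Lower temperatures make the top-scoring note dominate the sampled phrase.
rng = random.Random(0)
print([sample_note(candidates, scores, 0.3, rng) for _ in range(8)])
```

A low temperature keeps a phrase predictable and close to the model's strongest guess. Raising it lets unusual notes such as the B-flat appear more often, at the cost of coherence if pushed too far.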